Hello,

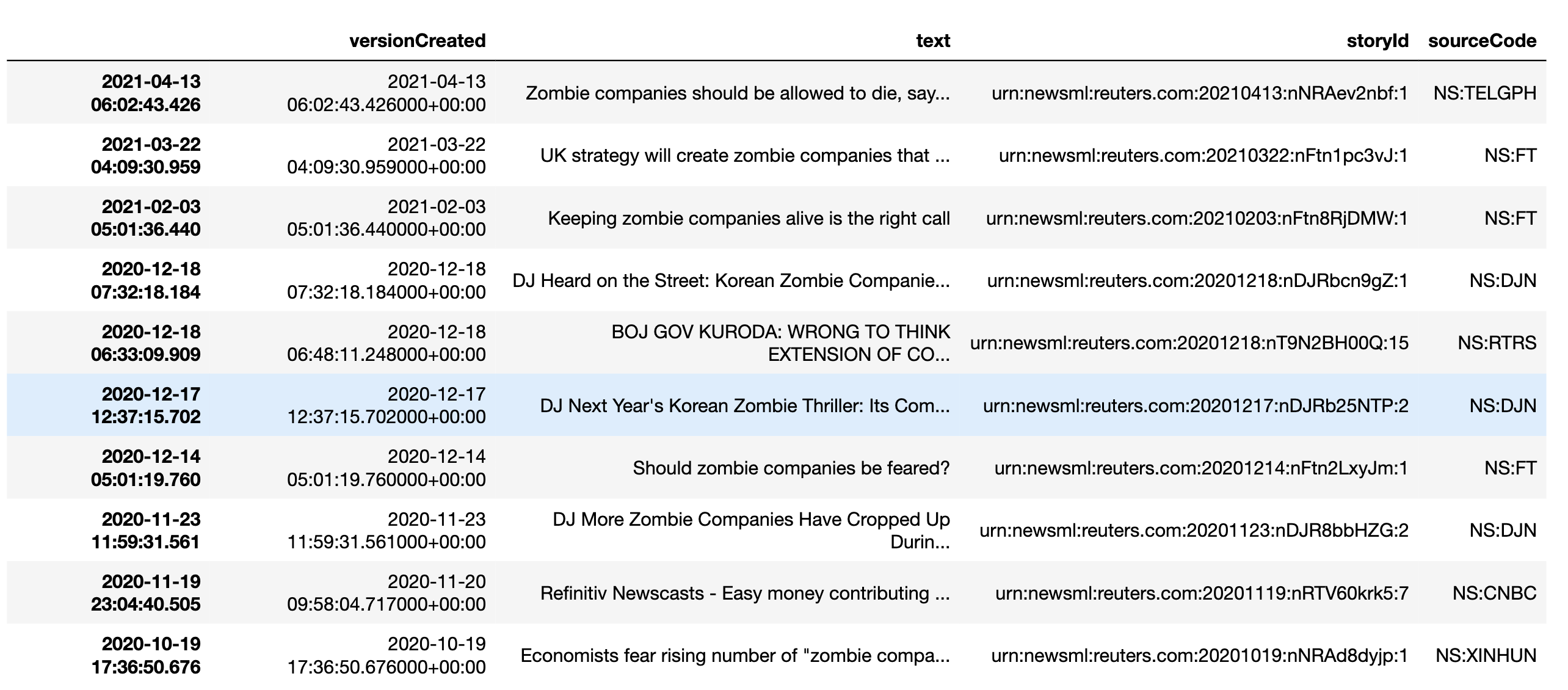

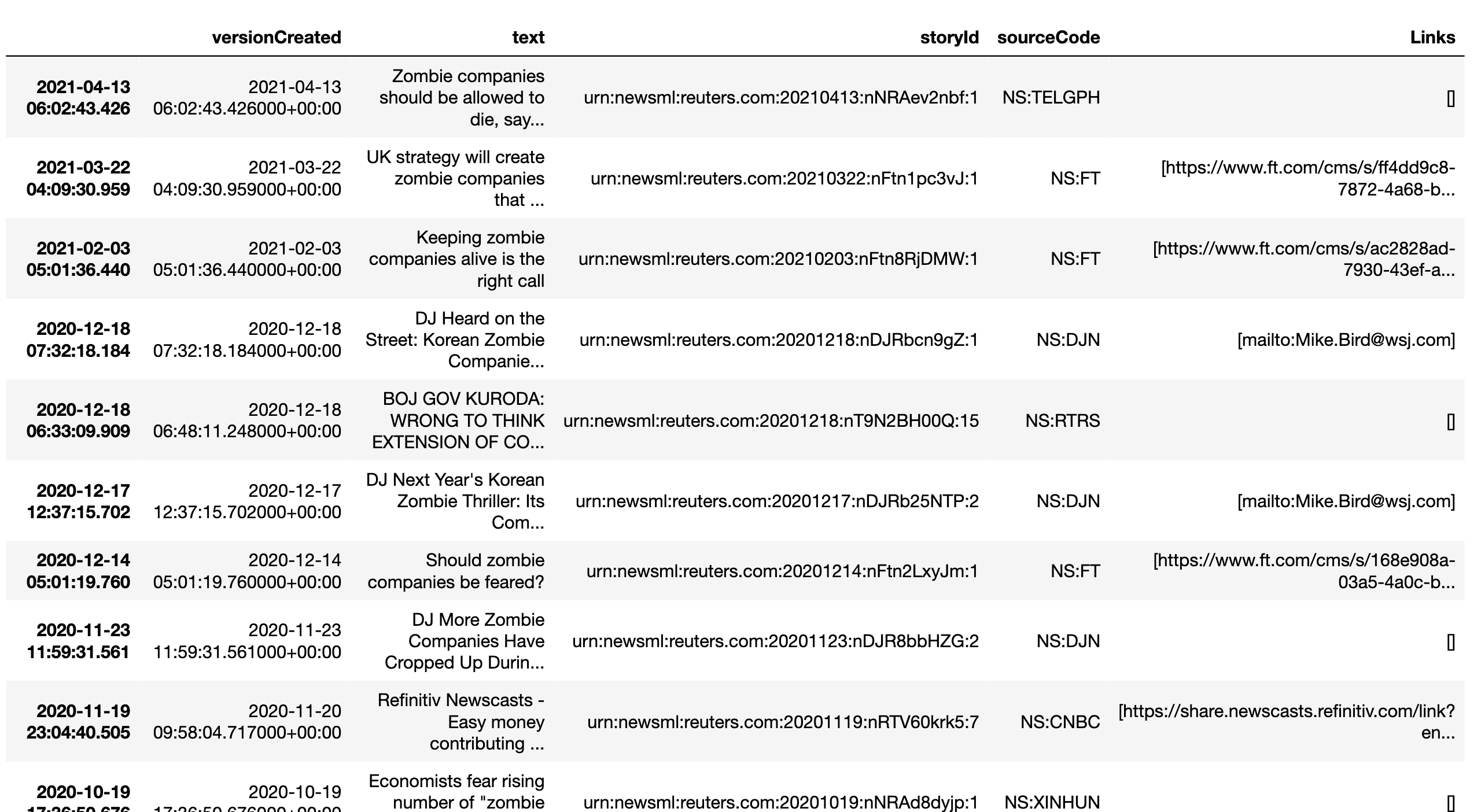

I am trying to find a way if I can get Web URL from a URN or story ID that is available inside EIKON.

For example there was news dated today 18 May 2021 with headline

"Euro zone inadvertently supported zombie firms, ECB finds"

The above news headline has a story id/urn :"urn:newsml:reuters.com:20210518:nlXXXXXXXX:3" & similarly the news is available on its webpage with link as

https://www.reuters.com/article/us-ecb-policy-zombies-idUSKCN2CZ0QP

In the above case what is the way through EIKON API I can get the relevant URL, so that the same news can be read via webpage. Please assist.